by Andrew Y. Chin, Head—Investment Solutions and Sciences & Yuyu Fan, Principal Data Scientist—Investment Solutions and Sciences, AllianceBernstein

A richer dialogue between human experts and large language models may improve outcomes.

Artificial intelligence and human talent are intertwined in investment research today, a symbiotic relationship that seems likely to last as long as research itself. The quest to unlock outperformance through distinctive analysis is the bedrock of traditional fundamental and quantitative research, and we believe that AI is strengthening that foundation.

Large language models (LLMs) are a key part of the AI toolkit. Parsing vast amounts of unstructured data, news and reports at a speed and scale beyond human capabilities, they extract pertinent financial insights, market trends and potential investment signals—sharing them in high-quality, human-like text. LLMs are essentially well-read, highly knowledgeable associates available to analysts 24/7.

For research analysts and portfolio managers to harness LLMs’ full potential in making better-informed and timelier investment decisions, they need to ask the right questions and provide the right instructions. In doing so, analysts must amplify one of their own abilities: communication.

That’s where prompt engineering comes in: crafting precise queries that direct LLMs to generate the most relevant and accurate insights.

Prompt Engineering: Leading the Conversation

Put simply, an LLM is on its best behavior when analysts furnish well-crafted prompts. These carefully designed questions or instructions give the model the context it needs, clarity on the specific task being requested and specifications for the output.

It’s crucial to clearly define stakeholder expectations and specific requirements from the start. This can be accomplished by applying patterns or techniques to improve the effectiveness of prompts, making them more consistent and more scalable—effective across multiple uses. This process is typically iterative, with analysts refining prompts based on the feedback and results from the LLM.
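One way to make prompts consistent and reusable is to give every prompt the same three parts named above: context, task and output specification. The Python sketch below is a minimal illustration under that assumption; the `PromptSpec` class, its field names and the placeholder company names are hypothetical and do not describe any particular firm's tooling.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class PromptSpec:
    """Hypothetical container for the three parts of a well-crafted prompt."""

    context: str        # background the model needs to interpret the request
    task: str           # the specific request being made
    output_format: str  # how the answer should be structured

    def render(self) -> str:
        """Assemble the parts into one prompt, always in the same order."""
        return (
            f"Context: {self.context}\n"
            f"Task: {self.task}\n"
            f"Output format: {self.output_format}"
        )


# Reusing one spec shape across several companies keeps the prompts consistent.
# The company names are placeholders, not real research targets.
for company in ["Company A", "Company B"]:
    spec = PromptSpec(
        context="You are assisting a fixed-income research team.",
        task=f"Summarize the main credit risks disclosed in {company}'s latest annual report.",
        output_format="Three bullet points, each under 25 words.",
    )
    print(spec.render())
    print()
```

Because only the `task` changes from one company to the next, feedback from one round of iteration (say, tightening `output_format`) carries over to every later use of the template.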

This process can take considerable bandwidth from both analysts and data scientists—and require a healthy dose of trial and error. But given the stakes, we think the investment of time and effort is capital well spent, with a potentially powerful payoff in more targeted, more reliable and more meaningful insights from LLMs that may enhance investment outcomes.

Understanding Prompt-Engineering Techniques

What are some of the methods in prompt engineering that may reduce the amount of “capital” that needs to be invested in interacting with an LLM, while keeping its output more on point? The real-world investment applications can be diverse and complex—and proprietary!—but a few basic hypothetical examples can shed some light.

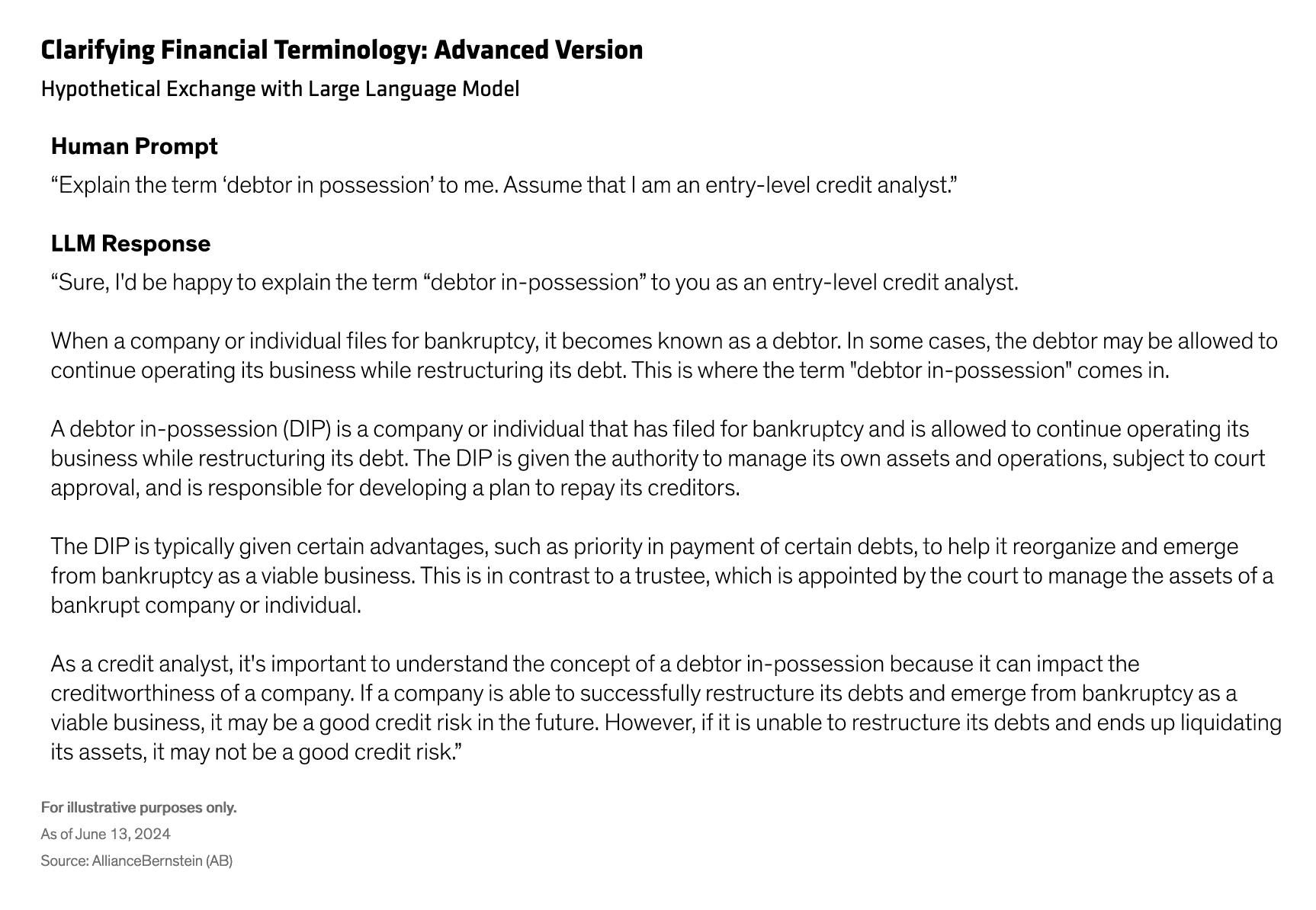

Imagine you’re an entry-level credit analyst reviewing a loan document, and you want to double-check your understanding of the term “debtor in possession.” Asking the LLM a direct question such as “Explain the term ‘debtor in possession’ to me” may produce the following response:

“Debtor in-possession (DIP) is a term used in bankruptcy law to refer to a company or individual that has filed for bankruptcy and is allowed to continue operating their business while they restructure their debts. The DIP is given the authority to manage their own assets and operations, subject to court approval, and is responsible for developing a plan to repay their creditors. This is in contrast to a trustee, who is appointed by the court to manage the assets of a bankrupt company or individual. The DIP is typically given certain advantages, such as priority in payment of certain debts, to help them reorganize and emerge from bankruptcy as a viable business.”

While this explanation might be useful to some people, it may not delve as deeply into the topic as an investment expert would require. Eliciting that level of detail requires telling the model more about the intended audience. Armed with the context that the query is coming from a credit analyst, the LLM can provide a more detailed response for someone with greater financial acumen (Display).

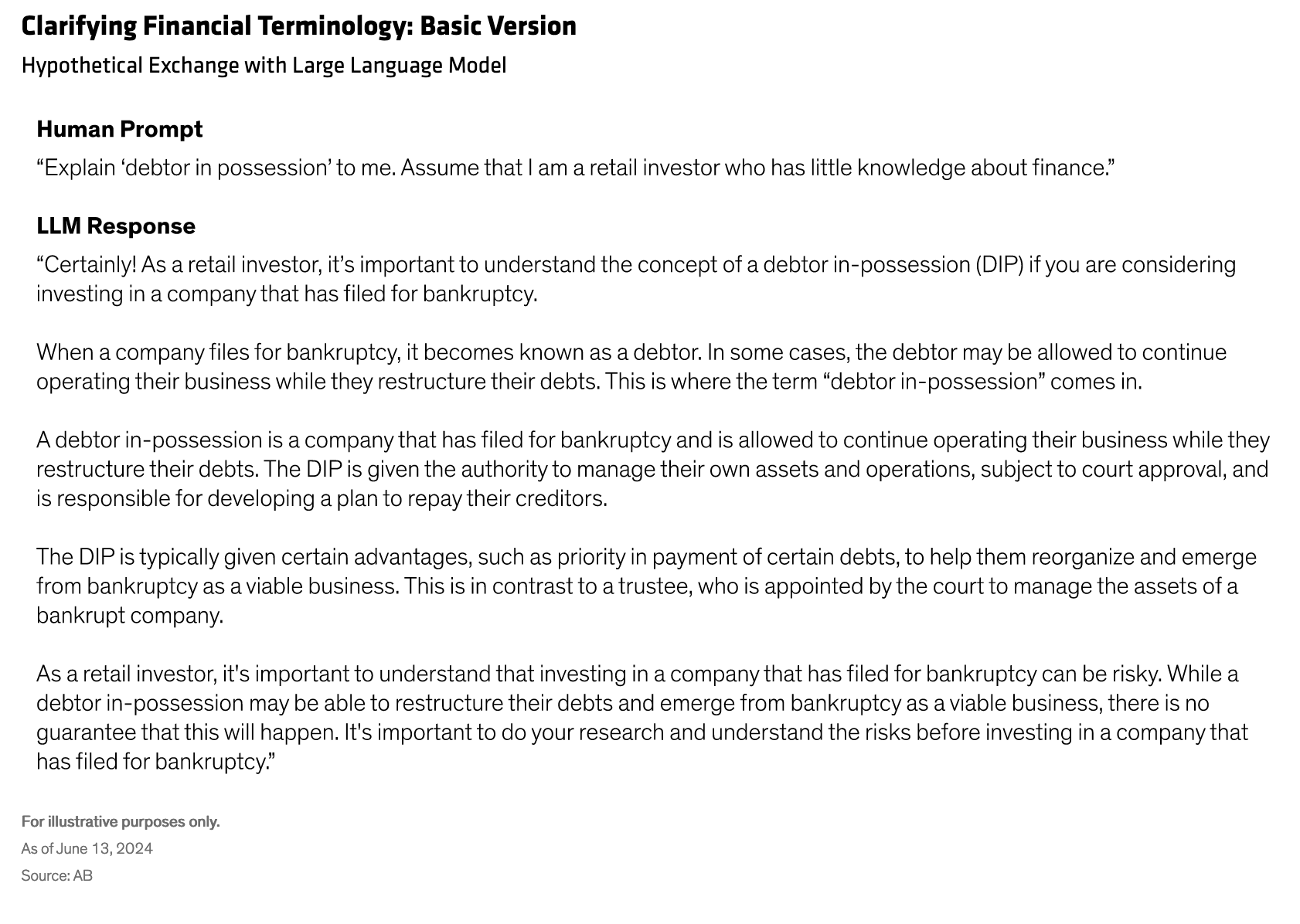

Adjusting the targeted audience works in the other direction, too. If a simpler response than the original output were required, perhaps for use with a different audience, the prompt could be reengineered to satisfy an individual investor with much less financial literacy (Display).
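The audience adjustment in both directions comes down to appending one sentence of context to the original question. This is a minimal Python sketch assuming a plain-text prompt. The function `build_explanation_prompt` and the audience descriptions are hypothetical, and no specific LLM provider's API is implied.

```python
from typing import Optional

# Hypothetical audience descriptions; the wording is illustrative, not a tested template.
AUDIENCES = {
    "credit_analyst": (
        "a credit analyst who understands corporate finance and bankruptcy law"
    ),
    "individual_investor": (
        "an individual investor with limited financial knowledge"
    ),
}


def build_explanation_prompt(term: str, audience: Optional[str] = None) -> str:
    """Return a prompt asking for an explanation of `term`, optionally for a given audience."""
    prompt = f"Explain the term '{term}' to me."
    if audience is not None:
        # Naming the audience signals how much depth and jargon the answer should use.
        prompt += (
            f" I am {AUDIENCES[audience]}. "
            "Adjust the depth and vocabulary of your answer accordingly."
        )
    return prompt


for who in (None, "credit_analyst", "individual_investor"):
    print(build_explanation_prompt("debtor in possession", who))
```

With no audience the function reproduces the plain question from the example. Each audience value adds only a single sentence, so analysts can compare how the responses change.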

When the prompt is engineered to cater to different levels of financial sophistication, the LLM quickly adapts its language and depth of content. In a similar way, telling the LLM itself to play a specific role (such as a portfolio manager, research analyst or risk analyst) in performing a task will likely lead to responses better aligned with the expected functions of that role.
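Role prompting is often written as a separate "system" instruction that precedes the user's request. The sketch below assumes the widely used chat convention of a list of `{"role": ..., "content": ...}` dictionaries. Field names differ across providers, and the helper `build_role_messages` and its role descriptions are hypothetical.

```python
from typing import Dict, List

# Hypothetical role descriptions used to steer the model's perspective.
ROLE_DESCRIPTIONS = {
    "portfolio_manager": "You are a portfolio manager focused on position sizing and portfolio-level risk.",
    "research_analyst": "You are an equity research analyst focused on company fundamentals and valuation.",
    "risk_analyst": "You are a risk analyst focused on downside scenarios, exposures and limits.",
}


def build_role_messages(role: str, task: str) -> List[Dict[str, str]]:
    """Pair a role-setting system message with the user's task."""
    if role not in ROLE_DESCRIPTIONS:
        raise ValueError(f"unknown role: {role!r}")
    return [
        {"role": "system", "content": ROLE_DESCRIPTIONS[role]},  # who the model should act as
        {"role": "user", "content": task},                       # what it should do
    ]


messages = build_role_messages("risk_analyst", "Summarize the key risks in this quarterly report.")
for message in messages:
    print(f"{message['role']}: {message['content']}")
```

Because the role sits in its own message, the task text stays unchanged, and the same request can be rerun under different roles.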

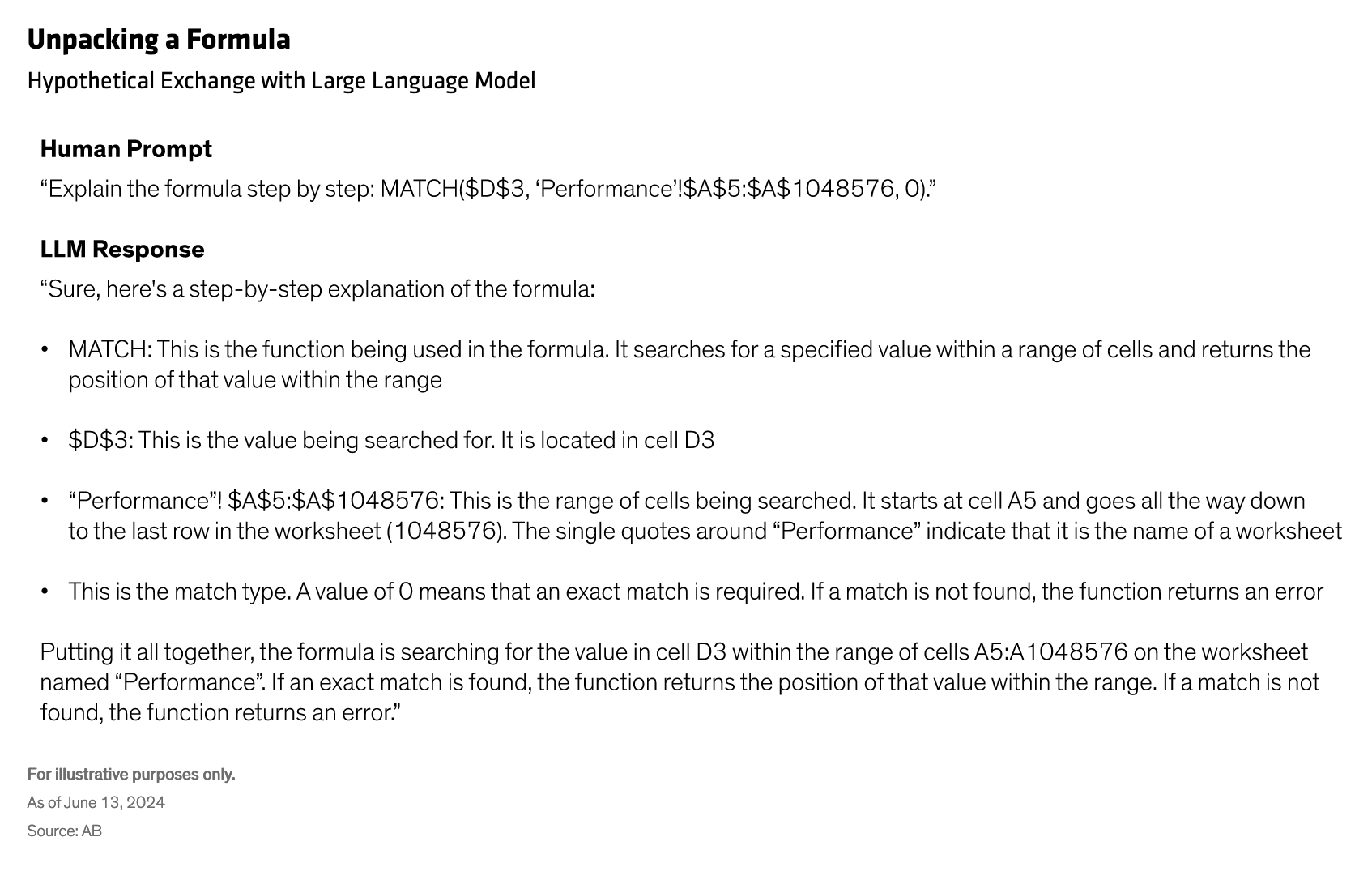

“Chain-of-thought” prompting, another common technique, involves decomposing a complex query into a sequence of simpler, logical steps and guiding the LLM to process each step individually. We’ve found that this technique enhances both the depth and clarity of responses and can be accomplished in a straightforward way by explicitly instructing LLMs to approach tasks “step by step.”

To use a simple example, if you were seeking to understand how a formula in an Excel cell calculates its value, you might ask the model to provide a step-by-step explanation, resulting in a clear, detailed explanation of each part of the formula followed by a brief summary (Display).
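The step-by-step request described above can be packaged as a reusable prompt builder. This is a minimal Python sketch. The function name `build_step_by_step_prompt`, the three-step breakdown and the sample formula are illustrative assumptions, not the prompt used to produce the Display.

```python
def build_step_by_step_prompt(formula: str) -> str:
    """Wrap an Excel formula in a chain-of-thought style request."""
    return (
        f"Explain how the Excel formula {formula} calculates its value.\n"
        "Work through it step by step:\n"
        "1. Break the formula into its functions, arguments and cell references.\n"
        "2. Explain what each part does, starting with the innermost function.\n"
        "3. End with a brief summary of what the whole formula returns."
    )


# Hypothetical formula: look up a value in column C of a sheet named Prices, defaulting to 0.
print(build_step_by_step_prompt("=IFERROR(VLOOKUP(A2, Prices!A:C, 3, FALSE), 0)"))
```

The numbered steps ask for the same structure the example describes: each part explained in turn, followed by a short summary.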

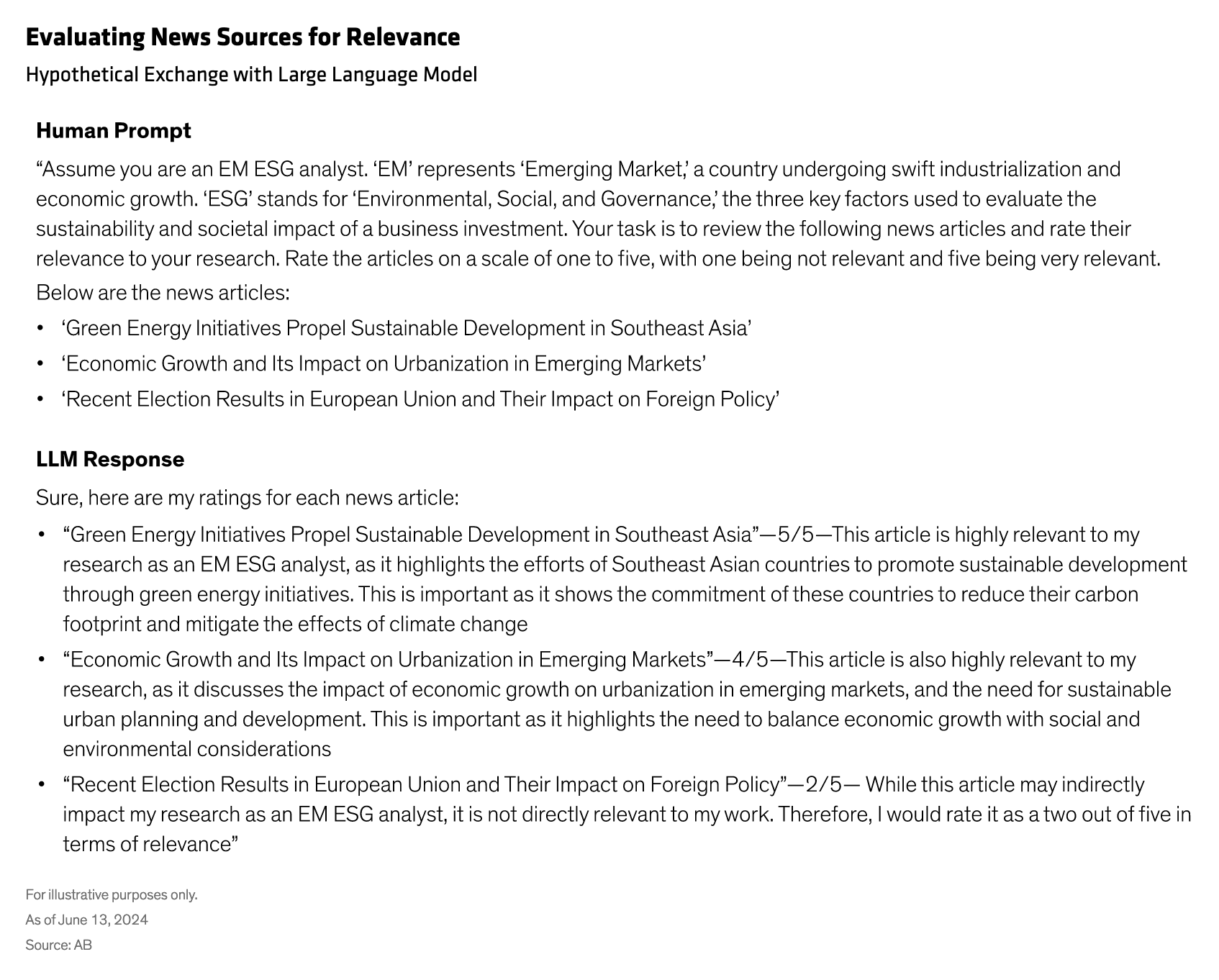

“Step-back” prompting is another useful technique, particularly when dealing with specialized financial terms and acronyms that can be clarified while prompting an LLM.

Let’s assume that an emerging market (EM) analyst is assessing countries’ environmental, social and governance (ESG) policies, which involves reviewing numerous news sources. Taking a step back to first clarify the acronyms EM and ESG enables the model to better focus on the detailed instructions for review—in this case, ranking hypothetical news titles for their relevance to the research effort (Display).
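Following the article's description, the step back has two stages: the model first defines the specialized terms, then carries out the detailed ranking using those definitions. The Python sketch below builds such a two-stage prompt. The function `build_step_back_prompt`, its parameters and the sample headlines are all hypothetical.

```python
from typing import List


def build_step_back_prompt(acronyms: List[str], goal: str, titles: List[str]) -> str:
    """Ask the model to define key acronyms first, then perform the detailed ranking task."""
    lines = [
        # The "step back": settle the meaning of specialized terms before the main task.
        "Step 1: Define each of these acronyms as used in investment research: "
        + ", ".join(acronyms) + ".",
        # The detailed instruction, which can now rely on the definitions from step 1.
        f"Step 2: Using those definitions, rank the news titles below from most to least "
        f"relevant to {goal}. Give each title's number and a one-line reason.",
    ]
    lines += [f"{i}. {title}" for i, title in enumerate(titles, start=1)]
    return "\n".join(lines)


# Hypothetical headlines for illustration only.
headlines = [
    "Government unveils national carbon-pricing framework",
    "Local football club wins regional championship",
    "Regulator tightens board-independence rules for listed companies",
]
print(build_step_back_prompt(["EM", "ESG"], "assessing the ESG policies of EM countries", headlines))
```

Numbering the titles lets the model refer to each one briefly in its ranking, which makes the output easier to check against the original list.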

Beyond these hypothetical articles, it’s easy to envision pointing an LLM directly to news sites themselves to uncover the most relevant articles and rank them, and even to summarize the news by country while gauging the related sentiment. In the fast-paced world of investing, it’s not hard to see the efficiency and time savings for human experts—as well as the potential to extract valuable insights.

From a big-picture view, we think prompt engineering enables human analysts to leverage the power of LLMs more effectively. Clear and precise instructions mark the pathway to more relevant and more accurate output. But LLMs are imperfect. Analysts must verify the outputs, because even thoughtful prompts may produce inaccurate or biased results, depending on the model’s training data and inherent limitations.

As we see it, the combination of seasoned research analysts and LLMs informed by sound prompt engineering may generate more effective and more efficient insights that have the potential to enhance performance. It takes time and effort to refine this relationship through clear direction and guidance. Whether it’s an LLM or a team of talented associates, we believe the investment is worth it.

About the Authors

Andrew Chin is Head of Investment Solutions and Sciences and a member of the firm’s Operating Committee. In this leadership role, he oversees the research, management and strategic growth of the firm’s strategic asset allocation, data science, indexing and custom beta businesses. Previously, Chin was the head of quantitative research and chief data scientist. In that capacity, he was responsible for optimizing the quantitative research infrastructure, tools and resources across the firm’s investing platforms. In 2015, Chin created and has since led the firm’s data science strategy to harness big data and leverage machine learning techniques to improve decision-making across the organization. From 2009 to 2021, he was the firm’s chief risk officer, where, in addition to his quantitative research responsibilities, he led all aspects of risk management and built a global team to identify, manage and mitigate the various risks across the organization. Chin has held various leadership roles in quantitative research, risk management and portfolio management in New York and London since joining the firm in 1997. Before joining AB, he spent three years as a project manager and business analyst in Global Investment Management at Bankers Trust. Chin holds a BA in math and computer science, and an MBA in finance from Cornell University. Location: New York

Yuyu Fan is a Principal Data Scientist on the Investment Solutions and Sciences team. In this role, she leverages statistical, machine-learning and deep-learning models to draw insights from financial data. Prior to joining AB in 2018, Fan worked at College Board as a psychometrician intern, using machine-learning models to monitor test validity, reliability and security. She holds a BA in sociology from Zhejiang University (Hangzhou, China), as well as MAs in sociology and psychology and a PhD in psychometrics and quantitative psychology from Fordham University. Location: New York

Copyright © AllianceBernstein