by Frank Picerno, Capital Spectator

It’s hard to overestimate the power of volatility for monitoring, modeling and forecasting risk in the art/science of portfolio management. The challenge is deciding what to focus on. Even the seemingly simple task of defining volatility is complicated, since the signals can vary substantially at times when analyzing markets through, say, the prism of the standard deviation of return vs. the trading range of an asset’s price. As a general description, I’m fond of Professor Ser-Huang Poon’s definition in A Practical Guide to Forecasting Financial Market Volatility: “the spread of all likely outcomes of an uncertain variable.” In any case, bringing order to what can be a black hole of possibilities is essential in this corner of risk management. The potential for genuine insight is considerable, but we almost never reach the promised land without a fair amount of analysis. Perhaps the first rule of extracting the maximum amount of information from the ebb and flow of market volatility is choosing objectives and then figuring out the best path for success.

This is tougher than it sounds. Volatility as a broad concept is a fertile resource, offering the intrepid analyst a spectrum of opportunities. The mistake is trying to gorge yourself in one fell swoop. Another blunder is thinking that there’s a silver-bullet solution for the perspective you seek. But one size doesn’t fit all here. As an example, let’s zero in on a relatively narrow but crucial objective: looking for early warning signs that a crash/correction is near.

There are several well-established empirical facts about volatility, and this relative clarity serves us well in our quest for perspective. Perhaps the most valuable lessons in the historical record are that a) volatility exhibits a degree of mean reversion; and b) high (low) volatility is associated with negative (positive) returns in real time. That’s hardly the equivalent of handing us the keys to the kingdom, but it’s enough to make a serious dent in the uncertainty that otherwise prevails for the untrained eye. One of the possibilities is looking for compelling signs that the market may be on the cusp of a hissy fit by monitoring the potential for a surge in vol from comparatively low levels.

As a simple example, let’s focus on the last major equity market crash: the fall of 2008. It’s easy to see that volatility spiked during this event. The question is whether there was any early evidence of the approaching collapse in stock prices. The balancing act, of course, is developing a system that’s sufficiently sensitive to provide timely warnings while minimizing the number of false signals. It’s quite easy to develop robust tools for one or the other. The challenge is finding the right set of modeling parameters that deliver both. Perfection will forever be elusive, but we can still make quite a lot of progress. This is where some folks get confused, sometimes to the point of shunning this branch of risk analysis completely. But ask yourself a question: Would you stop reading hurricane forecasts simply because there are no guarantees of accuracy?

Meanwhile, minds will differ on the details for modeling vol, depending on one’s objectives and the market under scrutiny. Suffice it to say, it’s crucial to spend time with the data and explore the possibilities. There are no “right” or “wrong” modeling choices per se, because every investor should customize the risk analysis to match the portfolio, investment objective, and so on.

With that in mind, let’s roll through a quick test and review how several definitions of return-based vol stack up for the US stock market in the fateful month of September 2008. Recall that this was the month that all hell broke loose in markets and macro. By many if not most accounts the precipitating event was the collapse of Lehman Brothers. The investment bank filed for bankruptcy protection on September 15, the largest bankruptcy to date in US history, with some $600 billion in assets at the time. In any case, Mr. Market went into a tailspin soon after, the likes of which we haven’t seen since the Great Depression in the 1930s. For obvious reasons, having a bit of intel about the blow that followed in the stock market would have been enormously helpful.

We can test what volatility was telling us at the time by looking to a variety of metrics. For instance, let’s review the S&P’s volatility record through the eyes of five definitions of volatility that reflect an assortment of methodologies for measuring market risk, each with its own set of pros and cons.

1. Trailing 30-day standard deviation of daily return, which is widely recognized as the conventional, if not necessarily the best, definition of volatility. The choice of trailing period is subjective, but 30 days is reasonable if the goal is generating timely signals that balance the risk of reacting to short-term noise against the risk of warnings that arrive too late for practical application.

2. The 30-day exponential moving average of the stock market’s squared daily return, which Kent Osband recommends as a simple but robust choice for defining vol (see Chapter 11 in Pandora’s Risk: Uncertainty at the Core of Finance, for instance).

3. The VIX, a widely followed measure of the implied volatility of the S&P 500 for the near term.

4. A range-based volatility estimator that incorporates open-high-low-close price data according to the methodology outlined in Garman and Klass (1980) via the TTR package in R.

5. A univariate GARCH(1,1) model that assumes a fat-tailed distribution (Student’s t) for estimating volatility via the rugarch package in R.
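For readers who want to experiment with definitions 1, 2, 4 and 5, here is a minimal sketch in standard-library Python. It is not the author’s R code, and the function names are hypothetical. Several choices are assumptions: the EMA smoothing constant (alpha = 2/(span + 1)) and its seed, the un-annualized Garman-Klass output (TTR’s implementation may differ in such details), and a GARCH(1,1) function that only filters variances with parameters you supply. rugarch, by contrast, estimates omega, alpha and beta by maximum likelihood under a Student’s t distribution. The VIX is a published quote, so it needs no calculation here.

```python
import math
import statistics


def log_returns(closes):
    """Daily log returns from a list of closing prices."""
    return [math.log(b / a) for a, b in zip(closes, closes[1:])]


def rolling_std(returns, window=30):
    """Definition 1: trailing sample standard deviation of daily returns.
    Element i covers returns[i : i + window]."""
    return [statistics.stdev(returns[i:i + window])
            for i in range(len(returns) - window + 1)]


def ema_squared(returns, span=30):
    """Definition 2 (sketch): EMA of squared daily returns, i.e. a variance.
    Assumes alpha = 2 / (span + 1), seeded with the first squared return."""
    alpha = 2.0 / (span + 1)
    level = returns[0] ** 2
    out = []
    for r in returns:
        level = alpha * r * r + (1 - alpha) * level
        out.append(level)
    return out


def garman_klass(opens, highs, lows, closes, window=30):
    """Definition 4: Garman-Klass (1980) range-based estimator, averaged over
    `window` days; daily scale, not annualized."""
    k = 2 * math.log(2) - 1
    daily = [0.5 * math.log(h / l) ** 2 - k * math.log(c / o) ** 2
             for o, h, l, c in zip(opens, highs, lows, closes)]
    return [math.sqrt(sum(daily[i:i + window]) / window)
            for i in range(len(daily) - window + 1)]


def garch11_forecasts(returns, omega, alpha, beta):
    """Definition 5 (filter only): after each close t, the next-day variance
    forecast omega + alpha * r[t]**2 + beta * (previous forecast).
    Parameters are supplied, not estimated; seeded with the sample variance."""
    var = statistics.variance(returns)
    out = []
    for r in returns:
        var = omega + alpha * r * r + beta * var
        out.append(var)
    return out
```

The article converts every series to percentile rankings. Because of that, order-preserving choices such as reporting a variance instead of its square root, or skipping annualization, leave the resulting signals unchanged.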

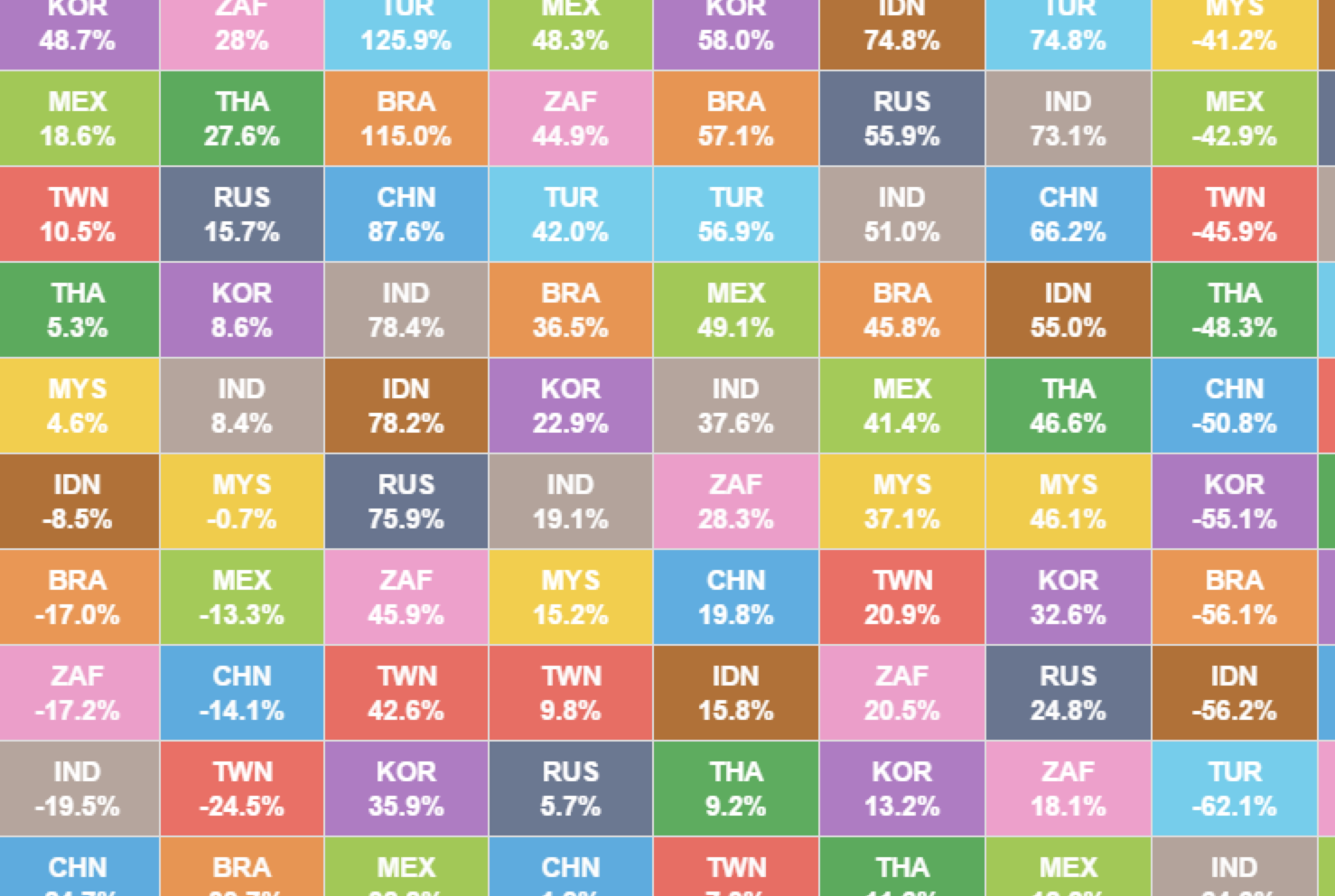

In all cases, I transform the output into percentile rankings (PR) for two reasons. One, PR provides an apples-to-apples comparison for analyzing the results. Two, PR gives us an objective yardstick for interpreting the signals. PR values range from 1 to 100, with 100 indicating that the underlying data is at the highest level recorded for the sample period, which, by the way, dates to the 1950s for the S&P figures analyzed here.
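The percentile transform is easy to sketch in the same stdlib-only Python. The version below counts the share of observations at or below a reading and rounds up, so the sample maximum scores 100 and nothing scores below 1. The exact ranking convention behind the article’s charts isn’t stated, so treat this as one reasonable choice, and note that the function names are hypothetical. The sketch also includes a point-in-time variant that ranks each day only against data available by that day’s close. Ranking against the full sample is fine for historical comparison, but it lets later data leak into earlier ranks if the goal is to replicate real-time signals.

```python
import bisect
import math


def percentile_rank(history, value):
    """Rank `value` against `history` on a 1-100 scale: the share of
    observations at or below `value`, rounded up. 100 = sample maximum."""
    ordered = sorted(history)
    at_or_below = bisect.bisect_right(ordered, value)
    return max(1, math.ceil(100 * at_or_below / len(ordered)))


def expanding_percentile_ranks(series):
    """Point-in-time variant: rank each reading only against readings up to
    and including that day, so no future data leaks into the signal."""
    seen, ranks = [], []
    for v in series:
        bisect.insort(seen, v)
        at_or_below = bisect.bisect_right(seen, v)
        ranks.append(max(1, math.ceil(100 * at_or_below / len(seen))))
    return ranks


print(percentile_rank([1, 2, 3, 4], 4))     # 100
print(expanding_percentile_ranks([3, 1, 2]))  # [100, 50, 67]
```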

In this test, let’s say that when a volatility metric reaches or exceeds the 95th percentile, we have a clear sign that risk is elevated to a meaningful degree. That’s a reasonable assumption, since we know that a regime shift in vol, moving from low to high, is associated with transitions from bull to bear markets. Not surprisingly, our five risk measures increasingly warned of danger as September unfolded:

The challenge is finding relatively reliable warnings that arrive early. It’s a bit hard to see the details on timing in the chart, so let’s turn to the data:

Note that the GARCH(1,1) signal dispensed the first warning on September 10, five days ahead of Lehman’s bankruptcy (indicated by the yellow bar), in terms of the raw data rising to or above the 95th percentile rank. A follow-up signal arrived the next day. (Note that these signals, using daily figures, were generated after the close each day.) Standard deviation followed suit on the day of Lehman’s implosion, Monday, September 15. It’s interesting to see that in most cases the vol warnings arrived before the market cracked. But the window of opportunity was small. Within days of the bankruptcy, stocks started tumbling, and the correction quickly turned into a crash.
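The rule behind these dates can be sketched in Python with hypothetical names: flag the first close at which a metric’s percentile rank reaches 95 or higher. The readings in the usage lines are made up for illustration and are not the article’s data.

```python
from datetime import date


def first_warnings(ranks_by_metric, threshold=95, start=None):
    """For each metric, return the earliest date (on or after `start`, if
    given) whose end-of-day percentile rank is at or above `threshold`,
    or None if the metric never crosses it."""
    firsts = {}
    for name, series in ranks_by_metric.items():
        firsts[name] = next(
            (d for d, pr in sorted(series)
             if pr >= threshold and (start is None or d >= start)),
            None,
        )
    return firsts


# Made-up readings for illustration only: (date, percentile rank) pairs.
readings = {
    "metric_a": [(date(2020, 1, 2), 90), (date(2020, 1, 3), 96), (date(2020, 1, 6), 99)],
    "metric_b": [(date(2020, 1, 2), 50), (date(2020, 1, 3), 60)],
}
print(first_warnings(readings))
# {'metric_a': datetime.date(2020, 1, 3), 'metric_b': None}
```

Comparing the resulting dates across metrics shows which one fired first. As discussed next, that leader can change from one episode to the next.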

The numbers above suggest that major declines don’t necessarily arrive without warning. Nonetheless, we shouldn’t be too smug. If you study these five vol indicators through time, you’ll find instances of false signals. You’ll also discover that the earliest warnings come from different indicators at different times. In this case, GARCH(1,1) happened to be the canary in the coal mine. But since we don’t know which one will sound the alarm first next time, it’s prudent to monitor a variety of risk metrics.

But that raises additional questions, since the more indicators we monitor, the greater the odds we’ll come across false signals. How do we distinguish genuine warnings from false alarms? For that matter, how should we choose among the many indicators at our disposal? The five listed above only scratch the surface of possibilities. Meanwhile, making informed decisions about model parameters demands more than a cursory round of trial and error.

Clearly, there’s no shortage of work to do in the search for early warning signs of significant market risk. The analysis above is hardly the last word on such efforts, although it’s a reasonable start. On that note, all five indicators are currently signaling low risk for the S&P: each of these vol metrics (as defined above) is below the 40th percentile, according to data through yesterday (September 9, 2014).

The relatively calm waters could turn rough rather quickly, of course, and so it’s a good idea to crunch the numbers on a weekly or even daily basis. The future’s always uncertain, but the present has a habit at times of tipping us off about trouble down the road. But there’s a catch: we have to be looking, and it helps to be looking with a robust set of quantitative spectacles.

Copyright © Capital Spectator